Twitter-API-Data-Wrangling-and-EDA-WeRateDogs

Project Overview

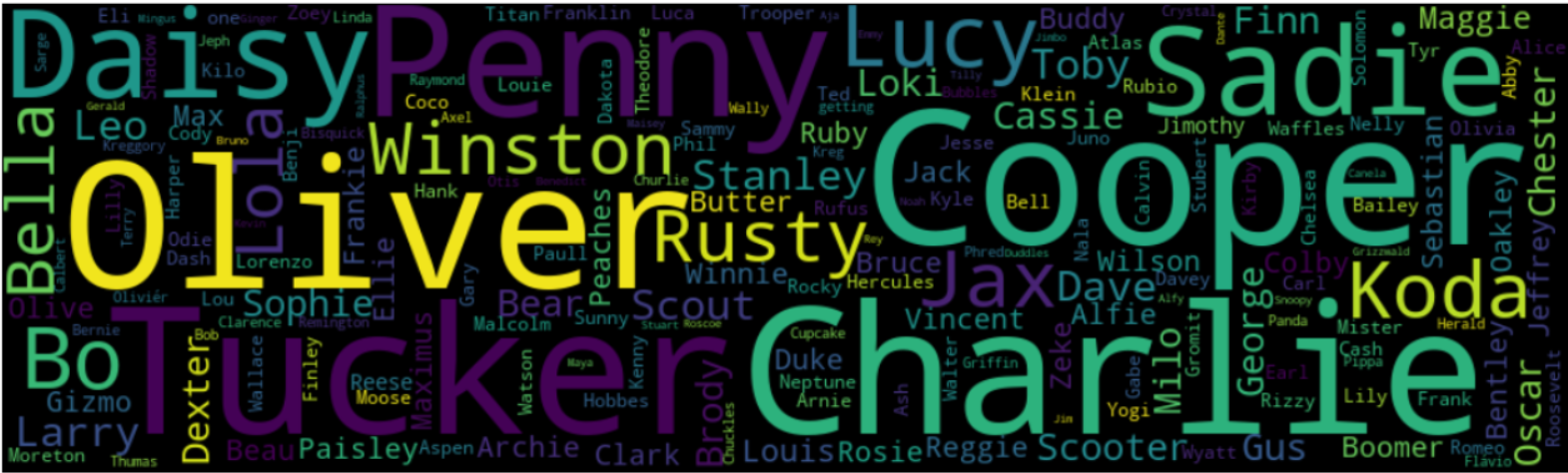

The dataset used in this data wrangling (and analyzing and visualizing) project is the tweet archive of Twitter user @dog_rates, also known as WeRateDogs. WeRateDogs is a Twitter account that rates people's dogs with a humorous comment about the dog. These ratings almost always have a denominator of 10. The numerators, though? Almost always greater than 10. 11/10, 12/10, 13/10, etc. Why? Because "they're good dogs Brent." WeRateDogs has over 4 million followers and has received international media coverage.

Tools Used

Python (Matplotlib, seaborn, Tweepy, pandas, numpy)

Twitter API

Wrangling Objective

To assess the data and check for quality and tidiness issues.

To clean the identified issues, then merge the tables to produce a clean dataset for analysis.

Data Gathering

Three datasets were gathered in this stage, each from a different source:

WeRateDogs Twitter archive (twitter_archive_enhanced.csv): This file was provided for direct download. I saved it locally and loaded it into the notebook with the pandas read_csv function.

Tweet image predictions (image_predictions.tsv): This file contains the dog breed predictions for the image in each tweet. It is hosted on Udacity's servers, and I downloaded it programmatically from the provided URL using the Python Requests library.

Additional data via the Twitter API (tweet_json.txt): At a minimum, this data had to include each tweet's retweet count and favorite ("like") count. Using the tweet IDs in the WeRateDogs Twitter archive, I queried the Twitter API for each tweet's JSON data with Python's Tweepy library and stored each tweet's JSON in a file called tweet_json.txt.

Querying the Twitter API required authentication details for a Twitter account with elevated access, which I applied for and which was approved within a couple of hours.
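The API step can be sketched roughly as follows. This is a minimal illustration, not the notebook's exact code. It assumes `api` is an authenticated `tweepy.API` instance (any object with a compatible `get_status` method works) and that tweet_json.txt holds one JSON object per line. The function names `fetch_tweets` and `load_counts` are hypothetical.

```python
import json


def fetch_tweets(api, tweet_ids, out_path):
    """Write each tweet's JSON on its own line; return the IDs that failed."""
    failed = []
    with open(out_path, "w", encoding="utf-8") as out:
        for tweet_id in tweet_ids:
            try:
                # Assumption: api behaves like tweepy.API
                status = api.get_status(tweet_id, tweet_mode="extended")
            except Exception:
                # Deleted or protected tweets raise an error; with Tweepy,
                # catching tweepy.errors.TweepyException would be narrower.
                failed.append(tweet_id)
                continue
            out.write(json.dumps(status._json) + "\n")
    return failed


def load_counts(path):
    """Read the line-delimited JSON file and keep only the needed fields."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for line in f:
            if not line.strip():
                continue  # skip blank lines
            tweet = json.loads(line)
            rows.append({
                "id": tweet["id"],
                "retweet_count": tweet["retweet_count"],
                "favorite_count": tweet["favorite_count"],
            })
    return rows
```

The list returned by `load_counts` can be passed directly to `pandas.DataFrame` to build the third table.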

Data Assessment

In this stage, I applied both visual and programmatic assessment to identify and list the quality and tidiness issues in the data. These are:

Quality Issues

twitter_archive table

tweet text column should only contain the text

Some entries are not original tweets (replies and retweets)

Drop columns that are essentially empty

Timestamp column is not in appropriate format

Ratings not extracted correctly (decimal ratings)

The rating_numerator column contains outlier values (1776, 204, 0)

The rating_denominator column has values greater or less than 10

Some dog names were entered incorrectly (a, an, the)
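Several of the twitter_archive fixes above could look something like the sketch below. It assumes the archive uses the column names `in_reply_to_status_id`, `retweeted_status_id`, `timestamp`, `text`, `rating_numerator`, `rating_denominator` and `name`. The function name `clean_archive` and the rating regex are illustrative choices, not necessarily what the notebook does.

```python
import pandas as pd


def clean_archive(archive: pd.DataFrame) -> pd.DataFrame:
    """Return a cleaned copy of the archive table (illustrative sketch)."""
    df = archive.copy()

    # Keep only original tweets: drop rows that are replies or retweets.
    is_original = (df["in_reply_to_status_id"].isna()
                   & df["retweeted_status_id"].isna())
    df = df[is_original].copy()

    # Store timestamps as datetimes rather than strings.
    df["timestamp"] = pd.to_datetime(df["timestamp"])

    # Re-extract ratings from the text so decimal numerators survive.
    # This takes the first "number/number" pattern found in each tweet.
    rating = df["text"].str.extract(r"(\d+(?:\.\d+)?)/(\d+)")
    df["rating_numerator"] = rating[0].astype(float)
    df["rating_denominator"] = rating[1].astype(float)

    # Words such as "a", "an" and "the" are not names; mark them missing.
    df["name"] = df["name"].where(~df["name"].isin(["a", "an", "the"]))
    return df
```

Outlier numerators and unusual denominators still need a manual look after this step, since some are legitimate ratings of several dogs at once and others are not ratings at all.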

image_pred table

Get the final prediction of the dog breed from the two predicted values

Capitalize each dog name
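One possible way to handle both image_pred items is sketched below. It assumes the table has prediction columns `p1` and `p2` with boolean flags `p1_dog` and `p2_dog` saying whether each prediction is a dog breed. The rule used here (take the first prediction flagged as a dog) and the output column `breed` are my assumptions for illustration.

```python
import pandas as pd


def final_breed(preds: pd.DataFrame) -> pd.DataFrame:
    """Add a 'breed' column holding the first prediction that is a dog."""
    df = preds.copy()

    # Prefer p1 when it is a dog, otherwise fall back to p2 when that is a
    # dog; rows where neither prediction is a dog are left missing.
    breed = df["p1"].where(df["p1_dog"], df["p2"].where(df["p2_dog"]))

    # Tidy the label: underscores to spaces, each word capitalized.
    df["breed"] = breed.str.replace("_", " ").str.title()
    return df
```

For example, a row with `p1 = "paper_towel"` (not a dog) and `p2 = "pug"` (a dog) ends up with the breed `Pug`.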

tweet_rem table

Rename the 'id' column to 'tweet_id'

Tidiness issues

Dog stages should be combined to one column

Merge the three DataFrames into one master DataFrame
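The tidiness fixes, together with the 'id' rename, can be sketched as below. It assumes the archive stores dog stages in four columns named `doggo`, `floofer`, `pupper` and `puppo`, each holding either the stage name or the string "None". The function names and the choice of inner joins are illustrative assumptions.

```python
import pandas as pd

STAGES = ["doggo", "floofer", "pupper", "puppo"]  # assumed column names


def combine_stages(archive: pd.DataFrame) -> pd.DataFrame:
    """Collapse the four stage columns into a single 'dog_stage' column."""
    out = archive.copy()
    stages = out[STAGES].replace("None", "")
    # Join the non-empty stages so tweets with two stages keep both.
    out["dog_stage"] = stages.apply(
        lambda row: ", ".join(s for s in row if s), axis=1
    )
    out["dog_stage"] = out["dog_stage"].replace("", pd.NA)
    return out.drop(columns=STAGES)


def build_master(archive, preds, counts):
    """Merge the three tables on tweet_id, keeping tweets present in all."""
    counts = counts.rename(columns={"id": "tweet_id"})
    return (
        combine_stages(archive)
        .merge(preds, on="tweet_id", how="inner")
        .merge(counts, on="tweet_id", how="inner")
    )
```

An inner join drops tweets without an image prediction or API data, which suits an analysis that needs all three sources. A left join on the archive would keep every tweet instead.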

Code

The full project is hosted on GitHub:

https://github.com/Genbodmas/Twitter-API-Data-Wrangling-and-EDA-Udacity-Data-Analytics-Nanodegree